Good morning, I have to run several gateways that use LTE for their internet connection.

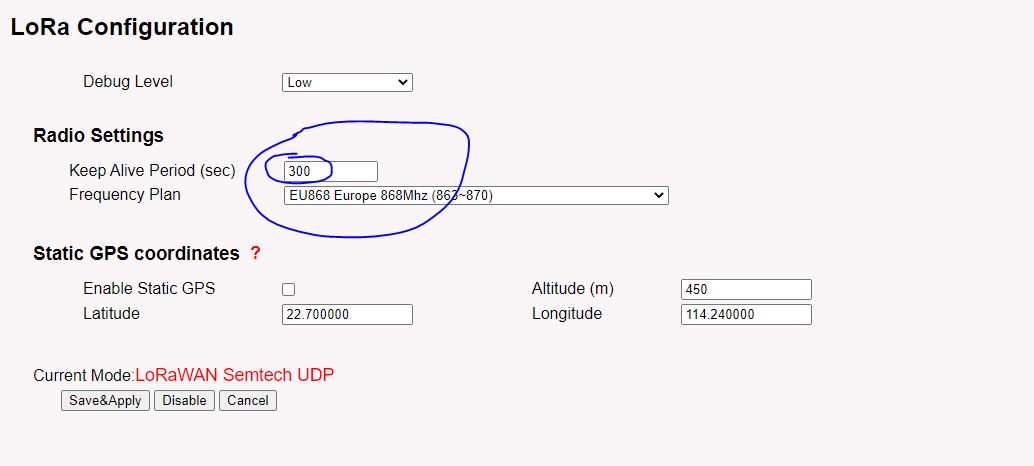

I want to reduce the heartbeat to about once every 300 seconds to save traffic on the prepaid LTE card.

Even if I set the keepalive period to 300 seconds, as in the picture, I still see a status in the TTN console every 30 seconds.

Any hint on how to get this result will be appreciated.

You really should leave this alone. The back-end NS needs to know that the GW is ‘live’ and available for sending e.g. join accepts, MAC commands, downlinks etc., not only to your own end devices but also to those of other users in the area, and to do that it needs confidence that your GW is alive! Many GWs allow the period to be changed, but updates more frequent than every 30 sec are not needed and could start to be excessive (I have seen some running with 10 s updates!), while intervals of more than 60 sec may start to impair network performance and availability/reach, so it is really best left at the 30 sec default.

The volume of data involved should not be excessive per month for a cellular system (I run many myself).

updated/corrected text

In the last couple of days there was a post from a senior TTI team member saying that more than 60s caused issues - nothing really bad, but it was outside their expectations.

Also, is that really the stat update time - it’s on the LoRa page - seems a bit of an odd place for what is a packet forwarder config.
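To make the distinction concrete: in the Semtech reference UDP packet forwarder, `gateway_conf` has separate `keepalive_interval` and `stat_interval` settings. Which of these the Dragino web UI field actually maps to is an assumption here, as is the idea that the firmware keeps a config in this shape. A minimal sketch that checks such a config against the 10 s to 60 s range mentioned in this thread (a forum rule of thumb, not an official limit):

```python
import json

# Range suggested in this thread; not an official TTN limit.
MIN_STAT_S = 10
MAX_STAT_S = 60

def check_intervals(conf_text: str) -> list[str]:
    """Return warnings for a packet-forwarder style JSON config.

    Assumes comment-free JSON; real global_conf.json files often contain
    /* ... */ comments that would need stripping first.
    """
    gw = json.loads(conf_text).get("gateway_conf", {})
    warnings = []
    stat = gw.get("stat_interval")
    if stat is None:
        warnings.append("stat_interval not set; forwarder default applies")
    elif not MIN_STAT_S <= stat <= MAX_STAT_S:
        warnings.append(f"stat_interval={stat}s is outside {MIN_STAT_S}-{MAX_STAT_S}s")
    return warnings

# Hypothetical config mirroring the 300 s setting from the first post.
example = '{"gateway_conf": {"keepalive_interval": 10, "stat_interval": 300}}'
print(check_intervals(example))
```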

Thanks for your reply. I understand the matter.

It is not a lot of traffic, but I am planning on 100 gateways, so reducing the cost by a factor of 10 makes a big difference after 5 years. I will look for a dedicated post. (Currently I have a data volume of around 40 MB per month per gateway.)

I just found it weird that even if I set it to a number higher than 30 it stays at 30 and no warning is shown (and I could not find anything in the documentation). A manufacturer matter, then.

My bad, that should have read more than 60 sec (I used the opposite of the more frequent than 10 s)! I will correct the post in case it is found by others, and this post then explains the correction! Updates in the range 10 s to 60 s are fine.

…will get to grips with English and correct grammar and sense one day!

Hi Jeff,

I have contacted Dragino: there was indeed a bug in the firmware with the keepalive period, which was stuck at 30 seconds.

Dragino sent me an upgraded firmware to correct this.

However, I will keep it below 60 as recommended.

Maybe not a lot of traffic from your nodes, but keep in mind that this is the TTN forum and TTN gateways are by definition available for the use of everyone’s nodes, not just those deployed by the person or organization who deployed the gateway.

Besides, the gateway doesn’t even have any way to know who a node belongs to, or that an apparent signal is even valid LoRaWAN traffic in more than the crudest sense of overall shape. A gateway’s job is just to pass on whatever it hears; only at the network server level does there start to be an effort to make sense of it.

Undersize your backhaul purchase and you could be in for a nasty surprise if someone else deploys nodes in your vicinity - legitimate TTN nodes, nodes sending traffic to their own private-network gateways that also gets picked up by your TTN gateways, other LoRa uses like smart meters, buggy commercial nodes, misconfigured science fair projects…

The actual rate of traffic which an 8- or 16-decoder gateway can produce is fairly limited in Internet terms, but don’t assume that it will always be the trickle you see during initial, isolated testing.

What you say is true. I am aware of it.

I will welcome the point in time when the traffic generated by nodes is higher than the heartbeat-generated traffic. At that point TTN will be fully and successfully deployed. So far I have to keep the gateway alive every 30 seconds, with only a few messages every hour from nodes.

5 to 120 ratio nodes/gateway (including welcomed “foreign” traffic)

BR

That status message probably amounts to around 25 megabytes a month.
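As a rough way to see how that figure scales with the interval, here is a back-of-the-envelope sketch. The 300 bytes per status cycle (payload plus UDP/IP overhead and acknowledgement) is an assumption, picked only because it lands near the ~25 MB/month estimate at 30 s; measure your own gateway before relying on it.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # 30-day month

# Assumption: ~300 bytes per status cycle including UDP/IP overhead and the ACK,
# chosen so that a 30 s interval lands near the ~25 MB/month estimate.
BYTES_PER_STATUS = 300

def monthly_status_mb(interval_s: float, bytes_per_status: int = BYTES_PER_STATUS) -> float:
    """Estimated status traffic per gateway per month, in MB (10^6 bytes)."""
    messages = SECONDS_PER_MONTH / interval_s
    return messages * bytes_per_status / 1e6

for interval in (10, 30, 60, 300):
    print(f"{interval:>3} s -> {monthly_status_mb(interval):6.2f} MB/month")
```

On those assumptions, moving from 30 s to 60 s roughly halves the status traffic, which stays inside the range recommended earlier in the thread.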

Particularly when actual traffic is as sparse as a few messages an hour, getting the status is useful to know that the gateway is continuously connected, vs its backhaul connection frequently going up and down such that it might not be online for one of those sparse uplinks (e.g., something you’d want to look at if you start seeing gaps in the uplink frame counter progression).
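Spotting such gaps is easy to script. A minimal sketch, assuming you already log the uplink frame counters of one device in arrival order; the plain list of integers is a hypothetical format, not something TTN hands you directly:

```python
def find_fcnt_gaps(fcnts: list[int]) -> list[tuple[int, int]]:
    """Return (last_seen, next_seen) pairs where uplink frame counters skip."""
    gaps = []
    for prev, cur in zip(fcnts, fcnts[1:]):
        # A counter reset (cur <= prev, e.g. after a rejoin) is not treated as a gap.
        if cur > prev + 1:
            gaps.append((prev, cur))
    return gaps

print(find_fcnt_gaps([10, 11, 12, 15, 16, 20]))  # [(12, 15), (16, 20)]
```

A gap only says that an uplink was not received; comparing its time against the gateway status history helps tell a backhaul outage from an RF loss.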

Granted, TTN may not expose that status information to you all that well; personally I’d add my own custom status reporting to my own infrastructure (and a reverse SSH or VPN maintenance tunnel, too).
