In the Meetjestad project, we have a basic environmental measurement platform up and running, with just over a hundred nodes deployed in the field, using TTN for data transfer. We have basic data storage in place now, but we’re running into problems with scalability and flexibility. We have some ideas on how to fix this (details below), but before we start reinventing our own set of wheels, we’re wondering: Is anyone else running into the same problems? How are others solving their data processing and storage needs? Are there existing platforms that would handle (part of) our needs? Would a solution like the one we describe be interesting to others too?

Current situation

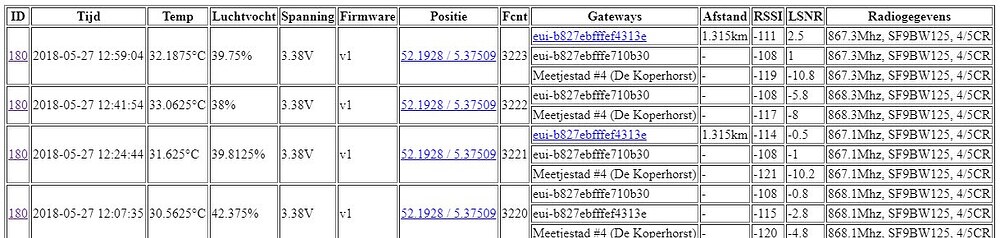

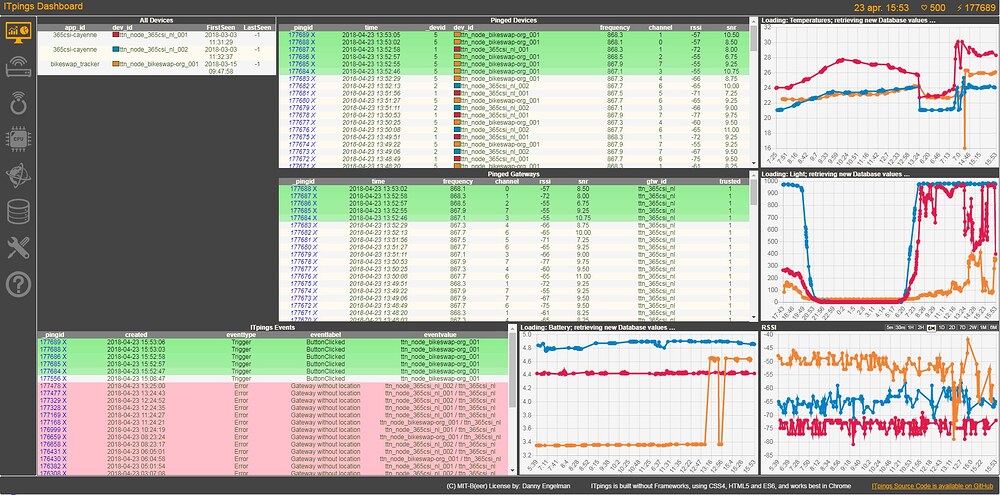

We have a number of nodes running in the field, measuring temperature and humidity. They send their data to TTN, where a custom Python script reads it, decodes the packets and writes the values to our database. From there, a number of PHP scripts make the data available online.
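A minimal, hypothetical sketch of what such a decode-and-store step looks like. The packet layout, the table and column names, and the use of SQLite as a stand-in for the real database are all assumptions for illustration. The point is that the byte layout and the table schema are both hard-coded.

```python
import sqlite3
import struct

# Hypothetical fixed layout: int16 temperature in 0.01 degC, uint16 humidity in 0.1 %RH.
PACKET_FORMAT = ">hH"


def decode(payload: bytes) -> tuple[float, float]:
    """Decode one uplink; this only works for exactly this packet layout."""
    temp_raw, hum_raw = struct.unpack(PACKET_FORMAT, payload)
    return temp_raw / 100, hum_raw / 10


def store(db: sqlite3.Connection, node_id: str, payload: bytes) -> None:
    """Write decoded values into a table whose columns mirror the packet layout."""
    temperature, humidity = decode(payload)
    db.execute(
        "INSERT INTO measurements (node_id, temperature, humidity) VALUES (?, ?, ?)",
        (node_id, temperature, humidity),
    )


# SQLite in memory stands in for the real (hypothetical here) database.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE measurements (node_id TEXT, temperature REAL, humidity REAL)")
store(db, "node-42", struct.pack(PACKET_FORMAT, 2150, 655))
```

Adding, say, a pressure sensor would change `PACKET_FORMAT`, `decode`, the table schema and every export script that reads those columns. That coupling is what makes format changes costly.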

Problems

- When we experiment with different boards, sensors, etc., we need to change the packet format. Currently, this also means changing the Python forwarder script, creating a database table, and updating the data export scripts. This is problematic, because:

- This requires administrator access to the server, which means involving one of the few people in our organisation who have access.

- This requires a fairly advanced and broad skillset.

- Other communities are interested in reusing our designs and systems. The simplest option is to copy our designs, hardware and server backend, but that requires a considerable investment on their part. Alternatively, they can use our systems as-is, but then they have no flexibility in customizing the data collected.

- Ideally, they could get started with our hardware and systems right away, doing all the needed customization through well-defined interfaces (either in the firmware on the node itself, or through some web interface).

Intended solution

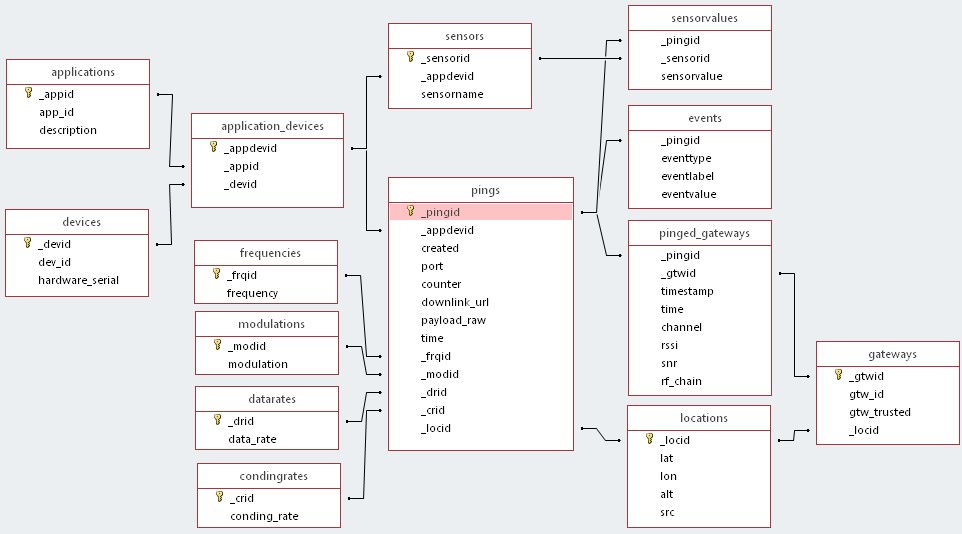

- Build a generic data collection system, where (in short) a node sends a metadata packet on startup that describes the data that will be sent in subsequent packets.

- This metadata packet defines a number of channels/variables that will be sent, along with metadata about these variables (measured quantity, sensor type, etc.).

- On the server side, all data is stored and can be flexibly grouped, downloaded, processed, etc.

- Additionally, the owner/maintainer of a node should be able to adapt metadata about the node (e.g. what enclosure is it in? Is it in a shady or sunny spot? If it is on a wall, what direction does it face?).

- Data should also be stored in a flexible way that allows processing it, and reprocessing older data later (e.g. with updated ideas of what constitutes invalid data).
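To make the idea more concrete, here is a minimal Python sketch of such a self-describing format. Everything in it is an assumption for illustration, not a worked-out protocol. The packet-type bytes, the 3-byte channel descriptor (quantity code, sensor code, decimal exponent), the code tables and the `Store` class are all hypothetical. It keeps raw payloads together with the channel list that was active when they arrived, so the same data can be decoded again later under a different validity rule.

```python
import struct
from dataclasses import dataclass

# Hypothetical code tables; in practice these would live in a shared registry.
QUANTITIES = {1: "temperature_degC", 2: "relative_humidity_pct"}
SENSORS = {1: "SHT21", 2: "BME280"}
META_PACKET, DATA_PACKET = 0x01, 0x02  # hypothetical first-byte packet types


@dataclass
class Channel:
    quantity: str
    sensor: str
    exponent: int  # physical value = raw * 10**exponent


def parse_metadata(payload: bytes) -> list[Channel]:
    """Metadata packet: type byte, channel count, then 3 bytes per channel
    (quantity code, sensor code, signed decimal exponent)."""
    channels = []
    for i in range(payload[1]):
        qty, sensor, exp = struct.unpack_from(">BBb", payload, 2 + 3 * i)
        channels.append(Channel(QUANTITIES.get(qty, f"unknown_{qty}"),
                                SENSORS.get(sensor, f"unknown_{sensor}"), exp))
    return channels


def decode_data(payload: bytes, channels: list[Channel]) -> list[tuple[Channel, float]]:
    """Data packet: type byte, then one big-endian int16 per announced channel."""
    raw = struct.unpack_from(f">{len(channels)}h", payload, 1)
    # A list rather than a dict, so two channels measuring the same quantity don't collide.
    return [(ch, r * 10 ** ch.exponent) for ch, r in zip(channels, raw)]


class Store:
    """In-memory stand-in for a real database."""

    def __init__(self):
        self.channels = {}  # node_id -> most recently announced channel list
        self.raw = []       # (node_id, channels at receipt, untouched payload)

    def handle_uplink(self, node_id: str, payload: bytes) -> None:
        if payload[0] == META_PACKET:
            self.channels[node_id] = parse_metadata(payload)
        elif payload[0] == DATA_PACKET:
            if node_id not in self.channels:
                raise LookupError(f"no metadata received yet for {node_id}")
            # Keep the raw bytes; decoding happens when the data is processed.
            self.raw.append((node_id, self.channels[node_id], payload))
        else:
            raise ValueError(f"unknown packet type {payload[0]:#x}")

    def process(self, is_valid):
        """(Re)decode every stored packet, keeping only values accepted by is_valid."""
        return [(node_id, [(ch, v) for ch, v in decode_data(payload, channels)
                           if is_valid(ch, v)])
                for node_id, channels, payload in self.raw]


store = Store()
# Two channels: temperature in 0.01 degC (exponent -2), humidity in 0.1 % (exponent -1).
store.handle_uplink("node-42", bytes([0x01, 2, 1, 1, 0xFE, 2, 1, 0xFF]))
store.handle_uplink("node-42", bytes([0x02]) + struct.pack(">hh", 2150, 1023))

everything = store.process(lambda ch, v: True)
# Later, with a stricter (hypothetical) rule, the same raw data is simply reprocessed:
plausible = store.process(lambda ch, v: not (ch.quantity == "relative_humidity_pct" and v > 100))
```

Storing the channel list alongside each raw packet means a node can announce a different set of channels after a firmware change without breaking the decoding of its older data.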