I did read the linked thread, but I can’t see what solution you found.

The solution is that they need to upgrade the firmware. The MXOS version shown in their cloud changes from 0.0.3 or something like that to 0.0.4. I suppose that is some kind of firmware versioning.

If they haven’t upgraded it already, try writing on their community board that you have the same problem. I think it’ll then be fixed/updated within a few minutes.

I posted it on their support board; now let’s see what happens. On Twitter they suspect that the 4.58-second downlink delay of the TTN backend is deliberate, meant to shut out certain brands/types of gateways. Hmm.

Anyone reading here who can explain the purpose of the long downlink delay of TTN’s servers?

All by design:

And to allow TTN to use the same gateway for downlinks that are to be sent earlier, TTN cannot send messages to a gateway until they’re almost due.

Understood so far. But why did the timing suddenly stop working with the MatchX gateways, after it had worked without problems for several weeks? Was there a change in the TTN backend in the past days/weeks?

IMHO this is the worst design decision TTN ever made. It’s even worse when using TTN’s own protocol (more latency than UDP).

Which gateways really don’t have enough memory to queue sufficient downlinks?

Hmmm, I read that would in fact support scheduling on the gateway, but maybe not (yet):

Also, I made up my own reasoning for this:

I’m not sure any of those reasons are enough to justify such a short interval; I think packets arrive with less than 100 ms to spare.

This has caused numerous problems with slower links and personally I’ve moved customers to private server setups as a solution - those networks are not part of public TTN because of this.

Just for the sake of completeness, I wonder how much sooner TTN could schedule RX1:

And TTN surely prefers RX1 over RX2:

And github.com/TheThingsNetwork/packet_forwarder/blob/master/docs/IMPLEMENTATION/DOWNLINKS.md explains gateways might need some 100ms themselves as well.

Sure, application downlinks have tight scheduling constraints if they are to meet RX1, but I’m not even talking about those, and from what I gather neither is most of this topic: it’s about OTAA accept responses, which are generated within the core in NetworkServer.

I’ve seen those comments in DOWNLINKS.md, but it’s a real shame that the whole repository has been on hold for many months already. I’m not sure what’s going on with it anymore.

I’m very curious to see how a “fresh” TTN protocol implementation like the one on TTN’s own gateway reacts.

Every gateway running an older packet forwarder, like the stock basic/gps forwarder or early versions of the poly forwarder. Those versions of the software do not implement JIT queuing: they send every packet received from the back-end straight to the hardware, which has no queue. So the last packet to arrive would be transmitted and earlier packets lost.
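To make that concrete, here is a toy Python model of the two behaviours. It is only a sketch, not code from any real packet forwarder: the class names `NoQueueGateway` and `JitQueueGateway`, the payloads, and the timestamps are all made up for illustration, and it assumes hardware that can hold exactly one pending downlink.

```python
import heapq


class NoQueueGateway:
    """Toy model of an old forwarder: the radio holds a single pending downlink."""

    def __init__(self):
        self.pending = None

    def receive(self, tx_time_us, payload):
        # A newly arrived packet overwrites whatever was still waiting.
        self.pending = (tx_time_us, payload)

    def transmitted(self):
        return [] if self.pending is None else [self.pending[1]]


class JitQueueGateway:
    """Toy model of a forwarder with a queue ordered by transmit time."""

    def __init__(self):
        self.queue = []

    def receive(self, tx_time_us, payload):
        heapq.heappush(self.queue, (tx_time_us, payload))

    def transmitted(self):
        # Packets are handed to the radio in order of their transmit time.
        return [payload for _, payload in sorted(self.queue)]


# Three downlinks arrive back to back, long before any of them is due.
downlinks = [(1_000_000, "A"), (2_000_000, "B"), (3_000_000, "C")]

old, jit = NoQueueGateway(), JitQueueGateway()
for tx_time_us, payload in downlinks:
    old.receive(tx_time_us, payload)
    jit.receive(tx_time_us, payload)

print(old.transmitted())  # ['C']
print(jit.transmitted())  # ['A', 'B', 'C']
```

With the first model, a server that sends downlinks early loses all but the last one, which is why it has to hold each message back until it is almost due.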

I’ve been told there should be a feature request to allow specifying the RX1 delay for a node. Hopefully that will be implemented in the new back-end, because the current window is far too small for any useful application processing (when combined with all other delays).

They suffered the same delay last time I checked. The reason I was told is that the back-end does not distinguish between the two types of gateways (Semtech or ttn-gateway-connector protocol).

Wow, they are really hostile on Twitter. For me, a supplier accusing others in the open and then asking people to send an email if they want proof, would quickly drop from my possible supplier list, but well…

(This forum shows timing has always been tedious with TTN and now MatchX claims “a sudden deceitful 4.6s change”?)

Yes, the communication is terrible. I don’t know why they react in this aggressive manner. But the people behind it seem technically experienced.

To me they don’t seem experienced, since they obviously didn’t know which code they are using. They directed me to a source link which has a 30 ms delay. That was a lie! Later they just said they had changed it to 500 ms, without explaining why.

Yes I tried explaining to them on twitter that this affects all gateways I’ve tried but they don’t appear to be the most reasonable people on this issue.

I prefer a Hanlon’s razor approach to it but have to say there has been a running rumour that TTN protocol / TTN gateways would have a “preferred” path, even before MatchX took to twitter about it.

So far in my tests the TTN protocol has actually had a worse delay, likely because of all the overhead (it can’t compete with simple JSON over UDP).

There have not been any changes in the scheduling logic since we doubled the time-before-tx on March 10 2016 (1bb19bf818). After reading the discussion here (and on Twitter) I just added another 200ms (c8bd97b135) to increase support for slow connections (or slow gateways). I’ll try to have that change deployed later today.

This is definitely not true. In the future we may give application owners the option to prefer authenticated/secure gateways (using MQTT or gRPC over TLS) over unauthenticated/insecure gateways (using JSON over UDP), but that won’t be enabled by default.

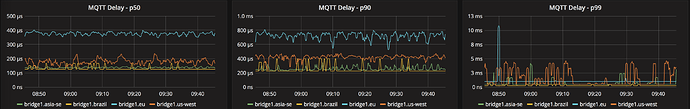

The MQTT server in between indeed adds a bit of delay. We’re working hard on reducing this by optimizing our MQTT server. Right now the extra delay is a few milliseconds at worst.

Of course TCP may also add some extra delay on bad connections (TCP packets are retransmitted, whereas UDP packets would simply get lost)

Wow, this is like the best Xmas present from TTN I could ever hope for (yes, even better than the TTN gateway). Thank you so much @htdvisser

Yes I think much of the overhead is on packet generation on the client side. But very good to know you’re on top of the server!

I still have the “too late” error with the MatchX Gateway.

Fri Dec 22 12:24:29 2017 daemon.info syslog[1428]: INFO: Received pkt from mote: D000742B (fcnt=46037)

Fri Dec 22 12:24:29 2017 daemon.info syslog[1428]: JSON up: {"rxpk":[{"tmst":2204454315,"time":"2017-12-22T12:24:28.961510Z","tmms":1197980687961,"chan":2,"rfch":1,"freq":868.500000,"stat":1,"modu":"LORA","datr":"SF7BW125","codr":"4/5","lsnr":7.0,"rssi":-47,"size":23,"data":"ACt0ANB+1bNwA+EbAAujBACLA2K81Hg="}]}

Fri Dec 22 12:24:30 2017 daemon.info syslog[1428]: INFO: [down] PULL_ACK received in 49 ms

Fri Dec 22 12:24:34 2017 daemon.info syslog[1428]: INFO: [down] PULL_RESP received - token[182:216] :)

Fri Dec 22 12:24:34 2017 daemon.info syslog[1428]: JSON down: {"txpk":{"imme":false,"tmst":2209454315,"freq":868.5,"rfch":0,"powe":14,"modu":"LORA","datr":"SF7BW125","codr":"4/5","ipol":true,"size":33,"ncrc":true,"data":"IHrmg/62xh41zRa0XrvdYkBYcgohzAzbMejsCECNSLGH"}}

Fri Dec 22 12:24:34 2017 daemon.debug syslog[1428]: src/jitqueue.c:233:jit_enqueue(): ERROR: Packet REJECTED, already too late to send it (current=2208964645, packet=2209454315, type=0)

Fri Dec 22 12:24:34 2017 daemon.info syslog[1428]: ERROR: Packet REJECTED (jit error=1)

Fri Dec 22 12:24:40 2017 daemon.info syslog[1428]: INFO: [down] PULL_ACK received in 49 ms
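The numbers in this log can be checked by hand. Below is a small Python sketch that uses only the `tmst` and `current` values shown in the log. The helper name `us_diff` is made up, and the 30 ms and 500 ms thresholds are simply the two figures mentioned earlier in this thread, not values verified against the MatchX source. The gateway timestamp is a 32-bit microsecond counter, so the subtraction is done modulo 2^32.

```python
def us_diff(later, earlier):
    """Difference between two 32-bit microsecond counter values, allowing wrap-around."""
    return (later - earlier) % 2**32


uplink_tmst = 2204454315    # "tmst" in the rxpk (JSON up)
downlink_tmst = 2209454315  # "tmst" in the txpk (JSON down)
gateway_now = 2208964645    # "current" in the jit_enqueue error

rx_delay_us = us_diff(downlink_tmst, uplink_tmst)          # requested delay after the uplink
server_round_trip_us = us_diff(gateway_now, uplink_tmst)   # uplink until PULL_RESP was queued
margin_us = us_diff(downlink_tmst, gateway_now)            # time left before transmission

print(rx_delay_us)           # 5000000
print(server_round_trip_us)  # 4510330
print(margin_us)             # 489670

# Thresholds taken from the discussion above (assumed, not verified in any source code).
for threshold_ms in (30, 500):
    verdict = "accepted" if margin_us >= threshold_ms * 1000 else "too late"
    print(threshold_ms, verdict)
```

So the downlink was scheduled 5 s after the uplink, reached the gateway about 4.51 s after the uplink, and left roughly 490 ms before its transmit time. That would be plenty for a 30 ms margin, but falls just short of a 500 ms one.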

What firmware version is shown in the MatchX cloud interface for your gateway? I think it still runs the old firmware.

Is there any way for people to see which backend version is active?

(I don’t think the Releases page of TheThingsArchive/ttn on GitHub tells us?)