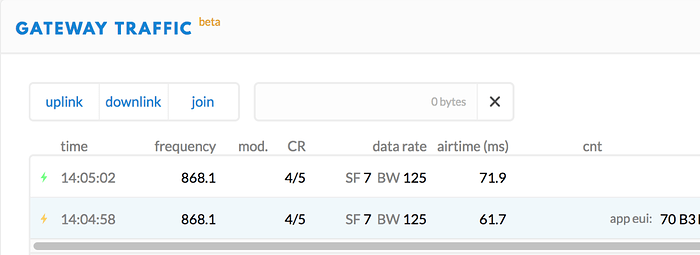

You should also see the Join Accept in the TTN Console, on the gateway’s Traffic page (green icon). If you don’t see it, you may not have copied the keys correctly; beware of MSB and LSB byte order (see Hex, lsb or msb?). Also click the Join Request (yellow/orange icon) to check for errors, such as the one discussed in OTAA shows "Activation DevNonce not valid: already used".
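To illustrate the MSB/LSB pitfall, here is a minimal sketch. It assumes the node runs the Arduino LMIC library, whose OTAA examples expect the DevEUI and AppEUI least-significant byte first and the AppKey most-significant byte first. Other LoRaWAN stacks may expect a different order, so check your library’s documentation. The helper names `reverse_hex_bytes` and `to_c_array` and the example DevEUI are hypothetical, for illustration only.

```python
def reverse_hex_bytes(hex_key: str) -> str:
    """Reverse the byte order of a hex key, e.g. MSB-first to LSB-first.

    Accepts plain hex ("0004A30B001C0530") as well as C-style input
    ("0x00, 0x04, 0xA3"), and returns plain upper-case hex.
    """
    # Drop any "0x" prefixes, then keep only hex digits (strips commas/spaces/braces)
    cleaned = hex_key.replace("0x", "").replace("0X", "")
    cleaned = "".join(ch for ch in cleaned if ch in "0123456789abcdefABCDEF")
    if len(cleaned) % 2:
        raise ValueError("hex string must contain whole bytes")
    data = bytes.fromhex(cleaned)
    # Reversing the byte sequence swaps between MSB-first and LSB-first
    return data[::-1].hex().upper()


def to_c_array(hex_key: str) -> str:
    """Format a plain hex key as a C array initializer, as used in LMIC sketches."""
    data = bytes.fromhex(hex_key)
    return "{ " + ", ".join(f"0x{b:02X}" for b in data) + " }"


if __name__ == "__main__":
    # Hypothetical DevEUI as shown MSB-first in the console
    dev_eui_msb = "0004A30B001C0530"
    dev_eui_lsb = reverse_hex_bytes(dev_eui_msb)
    print(dev_eui_lsb)              # 30051C000BA30400
    print(to_c_array(dev_eui_lsb))  # { 0x30, 0x05, 0x1C, 0x00, 0x0B, 0xA3, 0x04, 0x00 }
```

Reversing twice gives back the original order. Under the LMIC assumption above, you would reverse the DevEUI and AppEUI but paste the AppKey unchanged.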

It seems that old screenshots like the one below are no longer accurate. Today, I do not see the orange Join Request icon on the gateway’s Traffic page; only the green Join Accept shows up there. As usual, the application’s Data page only shows the orange Join Request (Activation). I’m not sure whether it shows up there if the keys are invalid, as TTN might then not even know which application a device belongs to:

(Update: one day later, I’m seeing both the Join Request and the Join Accept in the gateway Traffic page for a Kerlink gateway using the old packet forwarder. But for another node at a different location, I still only get the Join Accept in the Traffic page of a The Things Gateway.)