Hello! I think I have bricked my RS186. I tried to update the FW of my RS186 using BT, and it failed!

Now when I start the node, I have two LEDs on and… nothing more.

What to do?

Contact Laird support through their website.

No help or no response?

Isn’t that the same problem as discussed here and mentioned before… Laird is investigating?

Hi, I have had the RS1xx running for some time now, mounted outside. While the temperature measurement seems quite accurate, I am noticing the humidity is not accurate in conditions of > 80%.

The RS1xx uses the Si7021 sensor and I happened to have the same sensor on a break-out board, so I wanted to compare things a bit. I connected it to an Arduino MKRWAN1300 and put it outside next to the Sentrius.

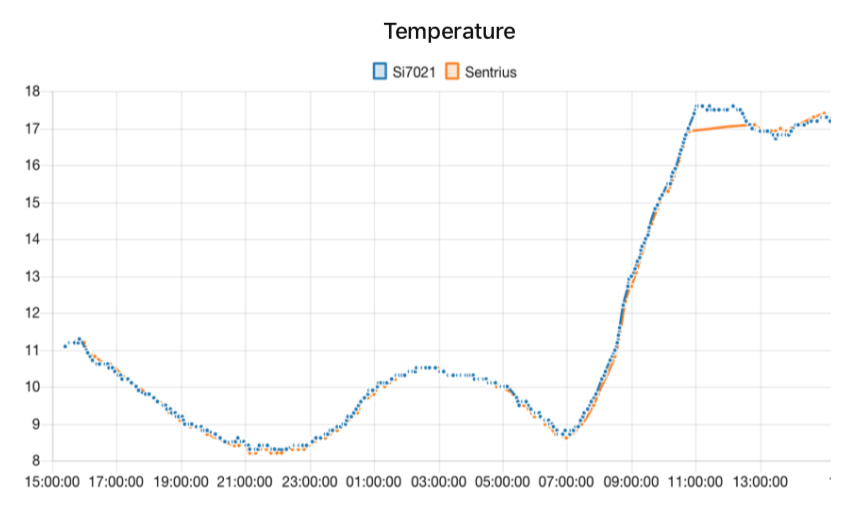

The temperatures are almost equal:

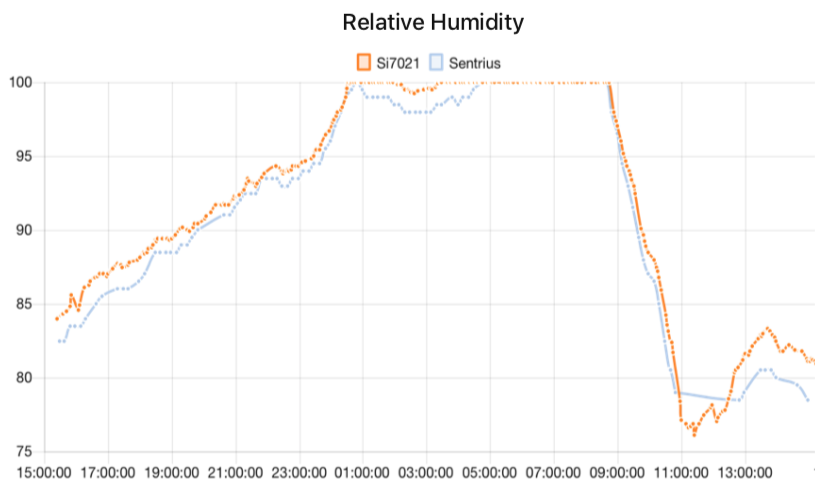

But so are the humidity measurements (unfortunately):

Those measurements are from last night, when we had no rain or even fog; despite that, I am getting 100% humidity, which can’t be true. I saw the same on other days when my primitive weather station measured between 80-90% humidity.

Posting this to understand if anyone else has experienced the same. The RS1xx seems to be a very well made device and I would have loved to use it for some project if the measurements were reliable.

Note it’s Relative Humidity vs Humidity! So for a given temp there is a ‘dew point’ at which water will condense out - dew will form. At a guess I would say if you had a cold surface outside at the time - say a car roof or window/glasshouse etc. - likely you would have seen condensate forming from around <9pm through midnight (100%RH) and onwards through the morning till it started to warm up again.
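To make the dew point idea concrete, here is a minimal sketch using the Magnus approximation. The coefficients are one commonly used parameterisation (an assumption on my part, not something taken from the RS1xx or Si7021 documentation), and the function name is just illustrative.

```python
import math

# Magnus coefficients: one common parameterisation for saturation over water
# (assumed here for illustration; other sources use slightly different values).
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # degrees Celsius


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Approximate dew point (deg C) from air temperature and relative humidity."""
    if not 0.0 < rh_percent <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + MAGNUS_B * temp_c / (MAGNUS_C + temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


if __name__ == "__main__":
    # At 100 %RH the dew point equals the air temperature, i.e. condensation.
    for temp, rh in [(20.0, 50.0), (10.0, 90.0), (10.0, 100.0)]:
        print(f"T={temp:.1f} C, RH={rh:.0f}% -> dew point {dew_point_c(temp, rh):.1f} C")
```

Feeding it the temperature and RH from a nearby official weather station would show how close the night-time air got to its dew point.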

Any variance/lag between the two plots is likely due to the white Gore-Tex-like covering over the RS1xx sensor vs what you have on the bare 7021, plus device-to-device variance. As you note, very consistent, and I would concur with you from my own experience that the RS1xx is well made and reliable - I have a number deployed and have recommended/sold a few to others.


Thanks for your reply; considering the dew point is a valid point, of course. The Si7021 is not the first sensor I am testing and I do not remember having seen similar behavior with the others, therefore my (possibly wrong) assumption was that the sensors would not be covered by condensate like a glass or metal surface is. Anyway, I am thinking of fetching some data from the nearest official weather station in order to compare in a bit more detail against the dew point - but that’s a bit off topic here.

I had no trouble setting up an RS191 temp/humidity sensor with The Things Industries - not sure how I wound up with an account there instead of here, but no matter.

The sensor is Rev 17 firmware v7.0 21-6-11, on US 915 LoRa.

When I added the Device I just accepted the defaults Rev 4 and firmware v6.1_20_6_16. I added it from the library and the default payload decoder seems to work fine.

I did not change the communication method in the sensor, it is still set to “Laird”. Every message from the sensor has “Sensor request for server time” set. I’m looking for the JSON or something more clever to set that.

My “big” question is how to increase Tx power for more range? Right now, I assume it’s sending without verification?

I took it home last night (7km) and no data until I brought it back this morning.

Thanks in advance.

First things first: if you take your time, use forum search and look back at historic posts around this device (I use lots!) you will see that it supports 3 modes of operation wrt payload: 1) Laird only, 2) Laird unconfirmed, 3) Cayenne/LPP. (1) requires confirmed messages - fine in a private network and in its intended market, e.g. cold chain/supply monitoring, but not OK on TTN as it ends up breaching the FUP in short order. I recommend using LPP or (2) - even then, under (2) it will still request a confirmed message - IIRC every 10 or 20 messages - as part of its watchdog/network monitoring processes, triggering a reboot/rejoin if it thinks it has lost its service to the LNS. This may also limit your messaging rate, depending on your deployment and the associated signal quality (essentially SF) you are able to use.

Again, the default I think is max Tx power for any given territory - and you don’t want to crank it up too high if not needed as, remember… it’s Long Range! - so every poor soul’s GW in range will hear you. Best use ADR to manage SF and eventually Tx power down to optimal levels.

Not unusual - remember you will likely need LOS unless you are able to benefit from reflections, knife-edge effects or very short ground or structural absorbers, and it will depend on the specific Fresnel zone characteristics for any given combination of node and GW locations. What lies between your home and the GW location?

For what? The LoRaWAN specification? In which case it will likely be 1.0.4? That may be ambitious; try 1.0.3 or even 1.0.2b.
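As a rough illustration of the LOS and Fresnel zone point, the sketch below computes the free-space path loss and the first Fresnel zone radius at mid-path for the 7 km link mentioned earlier. It assumes a 915 MHz carrier and an unobstructed path, which is the best case; buildings in between will add loss that this does not model.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB between isotropic antennas."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT_M_S)


def first_fresnel_radius_mid_m(distance_m: float, freq_hz: float) -> float:
    """Radius of the first Fresnel zone at the midpoint of the path."""
    wavelength_m = SPEED_OF_LIGHT_M_S / freq_hz
    return 0.5 * math.sqrt(wavelength_m * distance_m)


if __name__ == "__main__":
    freq_hz = 915e6  # assumed centre of the US915 band
    for distance_m in (500.0, 7000.0):
        loss = free_space_path_loss_db(distance_m, freq_hz)
        radius = first_fresnel_radius_mid_m(distance_m, freq_hz)
        print(f"{distance_m / 1000:.1f} km: FSPL {loss:.1f} dB, "
              f"first Fresnel radius at mid-path {radius:.1f} m")
```

At 7 km the first Fresnel zone is a couple of tens of metres across at mid-path, so rooftops that merely come close to the direct line can still cost signal.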

Again, IIRC the device asks for a time stamp when running the Laird payload and needs that to start effective operation - hence asking for it each time… Few systems are set up to provide this, but once answered it should go away - but you are still left with the other Laird mode issues. My recommendation - go LPP - use the uplink LPP decoder in the device console and it all ‘just works’!
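For anyone curious what an LPP decoder actually does, here is a minimal sketch in Python covering only the two Cayenne LPP data types relevant to a temp/humidity sensor (0x67 temperature, 0x68 relative humidity). The example payload and channel numbers are made up for illustration; I have not checked which channels the RS1xx really uses, and in the console you would normally just select the built-in Cayenne LPP formatter.

```python
# Cayenne LPP data types handled by this sketch:
#   type id -> (field name, size in bytes, signed?, scale factor)
LPP_TYPES = {
    0x67: ("temperature_c", 2, True, 0.1),      # 0.1 deg C per bit, signed
    0x68: ("humidity_percent", 1, False, 0.5),  # 0.5 %RH per bit, unsigned
}


def decode_lpp(payload: bytes) -> dict:
    """Decode a Cayenne LPP payload into {"<field>_<channel>": value}."""
    result = {}
    i = 0
    while i < len(payload):
        if i + 2 > len(payload):
            raise ValueError("truncated LPP header")
        channel, type_id = payload[i], payload[i + 1]
        i += 2
        if type_id not in LPP_TYPES:
            raise ValueError(f"unsupported LPP type 0x{type_id:02x}")
        name, size, signed, scale = LPP_TYPES[type_id]
        raw = payload[i:i + size]
        if len(raw) != size:
            raise ValueError("truncated LPP value")
        value = int.from_bytes(raw, "big", signed=signed) * scale
        result[f"{name}_{channel}"] = round(value, 1)
        i += size
    return result


if __name__ == "__main__":
    # Hypothetical payload: channel 1 temperature, channel 2 humidity.
    print(decode_lpp(bytes.fromhex("0167010e026850")))
```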

Hi Jeff, thanks for the speedy reply! I found this page when I tried to find info on this device, and how to increase Tx power.

Sorry my post was not clear. When one adds a Laird device to The Things Industries, one enters the model (RS191) and then it asks you to select the Hardware Version and Firmware version. But it only lists HW Rev 4, mine is HW Rev 17. And firmware 6.1_20_6_16, mine is actually firmware 7.0 21-6-11.

I just noticed the Things Industries system defaulted to LoRaWAN version 1.0.2. I was totally oblivious to that.

My house is at a higher elevation, but there are lots of buildings between us.

The communication choices have changed a little. Using the App I have these choices:

- Laird with confirmed packets (claims it’s the default)

- Laird without confirmed packets

- Cayenne with or without confirmed packets.

- Laird2 with or without confirmed packets.

I still do not understand how one changes the Tx power. It’s read-only in their App.

Sure, I believe (2) was added later - some of my deployments of these go back to 2018! Not all have been updated with the latest fw - if it ain’t broke…

Why would you want to try? given

at any given SF until down to SF7 when ADR will start dialing Tx pwr also if presented with a strong good quality RF link…Also you need to look at the spec for the underlying RM1xx module these units are based on…to check what max o/p may be.

I may need to fire up the App on my phone and look at the details again.

BTW if you see issues you can always set up manually rather than use the repository - by its nature this may not cover every rev of h/w or firmware (a huge task given the number of devices, vendors and variants, depending on how proactive the vendor is in supplying info/updates/historic settings input, etc.) so it can introduce wrinkles… All mine are set manually, as the hw rev never seemed to match when I wanted to deploy a new one!

I’m very lazy. So I tried the defaults first. Had they not worked, I would have deleted and gone full manual setup.

I need to go read the power level descriptions; I thought I understood them, but based on your post now I’m not so sure.

I have assumed, since I did the initial setup in my office with a Dragino LPS8-N gateway (and a LHT65 sensor too), that the automatic DR system would have dialed down the Tx power. So when I took them both home (RS191 and LHT65) they were using low power.

I’ll be back after I RTFM…

Nope, I believe that only happens when it can’t reduce SF any further (SF7 on TTN) - each SF step can be thought of as a ‘digital’ gain change of approx 2.5 dB, so going from say SF9 to SF8 is approx the same in link budget terms as staying on SF9 and turning down Tx by 2.5 dB… By stepping SF the device doesn’t drop Tx power but rather reduces time on air by approx 2x - from an overall device perspective this gives a greater power reduction - and hence improved battery life - than just dialing down Tx, ’cause, well, physics.

Once at SF7 the next way to save energy is to cut Tx power. Overall system headroom for what is considered a good signal is assessed by the back end across many uplinks - typically 20/22 - and with a globally set (by the LNS service) headroom threshold - I think on TTN it is a rather conservative value (8? 15?) but on private networks this can be adjusted to reflect tolerance for missed data… Note also that stepping SF changes noise tolerance - impacting viable SNR levels - where SF12 may tolerate say -20 dB SNR, SF7 might only tolerate say -7 dB SNR, so in a noisy environment the system might command a higher SF than would be suggested by RSSI values alone, in order to accommodate SNR headroom needs as well… It becomes a 3D puzzle, and just having a user make arbitrary changes can lead to poorer device and, at the macro level, network optimisation wrt device capacity and message reliability.
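To put numbers on the "time on air roughly doubles per SF step" point, here is a sketch of the standard LoRa time-on-air formula from the Semtech SX127x datasheet. The 23-byte frame (10 application bytes plus 13 bytes of LoRaWAN overhead), the 8-symbol preamble and the 30 s per day airtime budget are assumptions for illustration; check the fair use policy of your own network.

```python
import math


def lora_time_on_air_s(payload_bytes: int, sf: int, bw_hz: int = 125_000,
                       coding_rate: int = 1, preamble_symbols: int = 8,
                       explicit_header: bool = True, crc: bool = True) -> float:
    """Time on air in seconds; coding_rate=1 means 4/5, 2 means 4/6, and so on."""
    t_sym = (2 ** sf) / bw_hz
    # Low data rate optimisation applies when the symbol time exceeds 16 ms
    # (SF11 and SF12 at 125 kHz).
    low_dr_opt = 1 if t_sym > 0.016 else 0
    implicit_header = 0 if explicit_header else 1
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * implicit_header
    denominator = 4 * (sf - 2 * low_dr_opt)
    payload_symbols = 8 + max(math.ceil(numerator / denominator) * (coding_rate + 4), 0)
    return (preamble_symbols + 4.25 + payload_symbols) * t_sym


if __name__ == "__main__":
    frame_bytes = 23           # assumed: 10 app bytes + 13 bytes LoRaWAN overhead
    daily_budget_s = 30.0      # assumed airtime budget per device per day
    for sf in (7, 8, 9, 10):   # the 125 kHz uplink SFs in US915
        toa = lora_time_on_air_s(frame_bytes, sf)
        print(f"SF{sf}: {toa * 1000:.1f} ms per uplink, "
              f"about {int(daily_budget_s / toa)} uplinks/day within budget")
```

The ratio between neighbouring SFs comes out a little under 2x for short frames because the preamble and header overhead do not scale exactly with the payload.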


This is correct: Adaptive Data Rate | The Things Stack for LoRaWAN

It’s good to know, but part of the LoRaWAN specification is about leaving it to the server to find the best rate.

From your perspective, there’s no dial to move to increase power to increase range - not without potentially acquiring matching silver bracelets & a nice young person in blue to supervise you.

That’s part of the info you need; you then need to know what the legal power limit is for your part of the world.

America?

As I said in the first post, US 915 region. I am in Canada - Ontario, not far from Toronto.

Oh man. The Spec is tedious reading, as specs are. Still, the concepts and intent are clear, but it is making my brain hurt!

OK, I get it now. Unlike my previous experience with “simple” RF systems, where range is tied directly to Tx power, this system is far more nuanced. Indeed, as you said, it tends to use full Tx power all the time, using the spreading factor and bit rate to extend range. Under certain special circumstances the system may determine that the Tx power should be reduced, but that is rare, and certainly not likely to affect me.

So now I want to figure out how long it takes to “fix” a signal loss problem. And I’m doing some practical tests starting at a closer range. Today, I put the Laird sensor in my car, and parked in the far end of the plaza across the street. About 500m.

In the office, I was getting:

    "rssi": -42,
    "channel_rssi": -42,
    "snr": 13.5,
    "frequency_offset": "-1929",
    "bandwidth": 125000,
    "spreading_factor": 7,
    "coding_rate": "4/5"

And I have more reading to do before I understand all of these numbers’ meanings.

500 m away and I get:

    "rssi": -96,
    "channel_rssi": -96,
    "snr": 5,
    "frequency_offset": "1505",
    "bandwidth": 125000,
    "spreading_factor": 7,
    "coding_rate": "4/5"

I see there are two sets of numbers in the event details. I think the gateway is reporting something slightly different. Same RSSIs, but they mention a “frequency offset” and “channel index”? Like:

    "rssi": -96,
    "channel_rssi": -96,
    "snr": 5,
    "frequency_offset": "1505",
    "channel_index": 4,

sigh. more reading…
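For context on the RSSI/SNR figures quoted above, this sketch compares the reported SNR against the approximate demodulation floor for each spreading factor. The floor values are the typical figures from Semtech's SX127x datasheet (an assumption here, and real receivers vary), and the dictionaries simply copy the two readings from this post.

```python
# Approximate minimum SNR (dB) a LoRa demodulator needs at each spreading factor.
# Typical datasheet figures, used here as an assumption.
REQUIRED_SNR_DB = {7: -7.5, 8: -10.0, 9: -12.5, 10: -15.0, 11: -17.5, 12: -20.0}


def snr_margin_db(snr_db: float, spreading_factor: int) -> float:
    """Headroom in dB between the measured SNR and the demodulation floor."""
    return snr_db - REQUIRED_SNR_DB[spreading_factor]


office = {"rssi": -42, "snr": 13.5, "spreading_factor": 7}
car_park = {"rssi": -96, "snr": 5, "spreading_factor": 7}

if __name__ == "__main__":
    for label, rx in (("office", office), ("500 m car park", car_park)):
        margin = snr_margin_db(rx["snr"], rx["spreading_factor"])
        print(f"{label}: RSSI {rx['rssi']} dBm, SNR margin at "
              f"SF{rx['spreading_factor']} about {margin:.1f} dB")
    extra_loss = office["rssi"] - car_park["rssi"]
    print(f"extra path loss office -> car park: {extra_loss} dB")
```

Both readings still have positive margin at SF7, which is consistent with the network leaving the device on SF7 at 500 m.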

Not sure what you are now trying to achieve? Those numbers look about what might be expected, depending on the RF & built environment around you etc…

What problem are you trying to solve now? Looks like you have confirmed the broad outline I gave you, so unless you are trying to ‘break’ something…?

How far can I go?