Hi folks, I am trying to debug a stack that is having trouble receiving downlinks.

It would be helpful to know how often the Rx2 window is used - can I expect almost every downlink to be delivered in the Rx1 window, or is it really all over the place?

RX2 is only used if you missed the RX1 window or the packet was dropped.

From the documentation:

https://www.thethingsindustries.com/docs/reference/lbs-implementation-guide/implementation-details/#downlink-windows

Downlink Windows

LoRa Basics™ Station allows the server to provide both the RX1 and RX2 parameters of the downlink message, dnmsg. This is so that the gateway can retry downlinks in RX2, if it failed in RX1. The Things Stack however only sets the RX1 values (RX1DR and RX1Freq), thereby forcing the gateway to schedule only once. Errors in scheduling and subsequent retries are handled in The Things Stack and not on the gateway. If The Things Stack wants to force the gateway to schedule in the RX2 window, it sends the RX2 parameters in the same RX1 fields of the dnmsg but offsets the downlink time (xtime) to make the gateway schedule in the correct window.

Custom implementations of LoRa Basics™ Station working with The Things Stack only need to schedule downlink messages that are sent by The Things Stack and let the latter handle retries and conflicts.

Your quote only applies to Basics Station, and most gateways still run the older UDP-based packet forwarder or the TTN MQTT-based one. And even then, it states that the stack always determines which window to use and just (ab)uses the RX1 window parameters.

TTN schedules downlinks based on available ‘capacity’ in an RX window for the available gateways. It used to be that all uplinks with SF10 and up were answered in RX2 using SF9, and all uplinks with SF9 and below in RX1, to optimize transmission times for the gateway. I am not sure what the current algorithm is. Being able to choose which SF to use for RX2 complicates matters, so I would need to check the stack code for that.
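To make the old rule concrete, here is a minimal sketch of it as a function. This is only an illustration of the historical behaviour described above, not the current stack algorithm. The function name is made up, and it assumes RX1 answers at the same SF as the uplink (RX1 data rate offset of 0).

```python
def legacy_downlink_window(uplink_sf: int) -> tuple[str, int]:
    """Return (window, downlink SF) under the historical TTN rule.

    Hypothetical helper: SF10 and up were answered in RX2 at SF9,
    SF9 and below in RX1. Assumes RX1 uses the uplink SF (offset 0).
    """
    if not 7 <= uplink_sf <= 12:
        raise ValueError(f"unsupported spreading factor: SF{uplink_sf}")
    if uplink_sf >= 10:
        return ("RX2", 9)
    return ("RX1", uplink_sf)


if __name__ == "__main__":
    for sf in range(7, 13):
        window, dl_sf = legacy_downlink_window(sf)
        print(f"uplink SF{sf} -> {window} at SF{dl_sf}")
```

Under that old rule an SF7 uplink would always have been answered in RX1, but the capacity-based scheduling mentioned above means you should not rely on that today.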

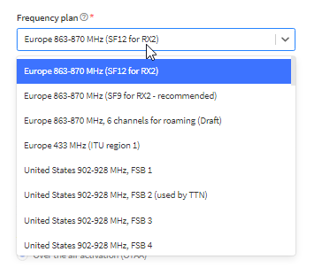

When you set your node up in the console, you choose RX2 to be SF9 or SF12.

I am specifically referring to EU here; other regions have different settings.

Thanks for the insight! So you would expect that after an uplink @ SF7, downlinks are always sent in Rx1 window @ SF7? Or do you have an estimation from experience for how often Rx2 is used?

If this is purely in the context of a device stack for use on TTN/TTS then the guidance/advice given is as may be expected and should be valid, however, different LNS implementations and different LW Service Providers may well follow different paths, driven by options and interpretations of the L-A specification(s) so if looking to offer as a ‘LoRaWAN’ offering for use in the wider market rather than a TTI/TTN specific offering you may well be wise to ask similar questions on other Fora and of other providers support teams, as I can see how differences may arise. As noted things like the use of SF9 for RX2 is very much a TTI/TTN option (though one also used/offered by many others) with the reasons for selection of both SF and specific Freq (for Eu) much discussed on the Forum previously (search is your friend). There is a clear reasoning and logic to the TTI/TTN selection, but others may have a different view/logic.

Very clear! The goal is definitely a fully-fledged normal use of Rx1 and Rx2, but it’s great if I can expect all downlinks in the Rx1 window, because I can then configure one radio for continuous listening on the Rx1 frequency & DR and debug the other that is behaving poorly…

Only if the gateway has airtime left at the RX1 frequency. In environments with a lot of downlink traffic (for instance workshops or places where people are developing), expect RX2 to be used regularly.

You cannot expect them all to be in RX1.

Most nodes have only one radio (and you need just one). It is idle until just before RX1 opens and listens for the sync word at the RX1 frequency and SF. If it doesn’t find it, it goes idle until just before RX2 and then listens for the sync word on the RX2 frequency and SF.
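The single-radio sequence above can be sketched roughly like this. It is a simplified model, not real firmware. All names are hypothetical, the `detect` callback stands in for whatever the radio driver does to look for a downlink, and the defaults are assumptions: an RX1 delay of 5 s, RX1 on the uplink frequency and SF, and RX2 at 869.525 MHz SF9 for EU868. The one-second gap between RX1 and RX2 is from the LoRaWAN spec.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class RxWindow:
    name: str
    opens_at_s: float  # seconds after the end of the uplink
    freq_hz: int
    sf: int


def class_a_windows(uplink_freq_hz: int, uplink_sf: int,
                    rx1_delay_s: float = 5.0,          # assumed RX1 delay
                    rx2_freq_hz: int = 869_525_000,    # assumed EU868 RX2
                    rx2_sf: int = 9) -> List[RxWindow]:
    """Build the two Class A windows; RX2 opens one second after RX1."""
    rx1 = RxWindow("RX1", rx1_delay_s, uplink_freq_hz, uplink_sf)
    rx2 = RxWindow("RX2", rx1_delay_s + 1.0, rx2_freq_hz, rx2_sf)
    return [rx1, rx2]


def receive_class_a(windows: List[RxWindow],
                    detect: Callable[[RxWindow], bool]) -> Optional[RxWindow]:
    """Try each window in order; the radio is idle between windows.

    Returns the window in which a downlink was detected, or None.
    """
    for window in windows:
        if detect(window):
            return window
    return None


if __name__ == "__main__":
    windows = class_a_windows(868_100_000, 7)
    # Pretend the network answered in RX2 only.
    hit = receive_class_a(windows, lambda w: w.name == "RX2")
    print(hit)
```

If a downlink is found in RX1 the loop never opens RX2, which matches the behaviour of a normal Class A device.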

Having a continuously listening radio for a Class A device is a battery drain. And for Class C you need to constantly listen on the RX2 frequency and DR when not in the RX1 window. (So only switch to the RX1 frequency and DR just before RX1 opens, and switch back as soon as it’s clear there is no sync word within the expected window at those settings.)

Keep in mind a listening radio consumes multiple mA, whereas an idle one’s consumption is in the µA range.
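To put rough numbers on that, here is a small sketch of the average-current arithmetic. The current figures are placeholders I picked for illustration (5 mA listening, 2 µA idle), not values from any datasheet, and the function name is made up.

```python
def average_current_ma(listen_s: float, period_s: float,
                       rx_ma: float, idle_ma: float) -> float:
    """Time-weighted average current over one uplink period."""
    if not 0 <= listen_s <= period_s:
        raise ValueError("listen_s must be between 0 and period_s")
    return (listen_s * rx_ma + (period_s - listen_s) * idle_ma) / period_s


if __name__ == "__main__":
    RX_MA = 5.0       # assumed receive current
    IDLE_MA = 0.002   # assumed idle current (2 uA)
    PERIOD_S = 600.0  # assumed: one uplink every 10 minutes

    # Class A style: two short windows, assumed 50 ms each.
    class_a = average_current_ma(0.1, PERIOD_S, RX_MA, IDLE_MA)
    # Debug setup: radio listening the whole time.
    continuous = average_current_ma(PERIOD_S, PERIOD_S, RX_MA, IDLE_MA)
    print(f"class A: {class_a:.4f} mA, continuous: {continuous:.1f} mA")
```

With these assumed numbers the two short windows average out to roughly 0.0028 mA, against 5 mA for continuous listening. That is fine on a bench supply for debugging, but not something to ship on a battery.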

It’s all for debugging! So I can confirm packets are actually passing by (and so I can see how many are passing by)
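For that kind of counting, one possible approach is to log the time between the end of each uplink and the start of each observed downlink, then bucket by window. The sketch below is only an illustration. The function names and the tolerance are made up, and the 5 s RX1 delay is an assumption you would replace with whatever your device is configured for (RX2 is always one second after RX1).

```python
from collections import Counter
from typing import Iterable


def classify_window(delta_s: float, rx1_delay_s: float = 5.0,
                    tolerance_s: float = 0.1) -> str:
    """Label a downlink by its delay after the end of the uplink."""
    if abs(delta_s - rx1_delay_s) <= tolerance_s:
        return "RX1"
    if abs(delta_s - (rx1_delay_s + 1.0)) <= tolerance_s:
        return "RX2"
    return "other"


def window_usage(deltas_s: Iterable[float], rx1_delay_s: float = 5.0,
                 tolerance_s: float = 0.1) -> Counter:
    """Count how many observed downlinks fell into each window."""
    return Counter(classify_window(d, rx1_delay_s, tolerance_s)
                   for d in deltas_s)


if __name__ == "__main__":
    # Made-up measurements, in seconds after the end of the uplink.
    observed = [5.0, 5.02, 6.01, 3.0]
    print(window_usage(observed))
```

Anything landing in "other" would point at a timing or configuration problem rather than a window choice by the network.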

Please make sure you stay within the FUP (Fair Use Policy).

Given the OP’s demonstrable experience from his activity, this is likely a redundant point to make. Apart from that, there has to be some latitude when it comes to developing a new stack, particularly when working to make that stack compliant because it isn’t at present, through no fault of the OP.

Also, how do you know FUP applies? TTS OS exists as do Discovery instances …

Sorry, I did not realize that there is latitude when you are developing your own stack.

Or is the current TTN stack not compliant?

Huh??

Given that the CTO is on the spec-writing committees of the LA, how could you think this possible?

Stack generally refers to the firmware for a device.

A nice compact OS stack that is bare-metal & Arduino’ised and supports SX126X radios - nom, nom, nom. Some testing required still.

huh

He is developing a new stack, but it is not compliant, and not through his own fault, so whose stack is it?

As it so happens I stumbled upon some code flying around on the World Wide Web, and I was so curious as to give it a go and it was an experience to say the least. The developer does not have any particular LW knowledge, more general RF, so I seized the opportunity to learn about LW myself and contribute to the project.

Any Harry Potter fan here will probably get the idea…