But this is not what was meant by “The next backend release will make it work for devices that don’t receive downlink from the application layer”, right? If it is, please see “ADR - not what I expected”.

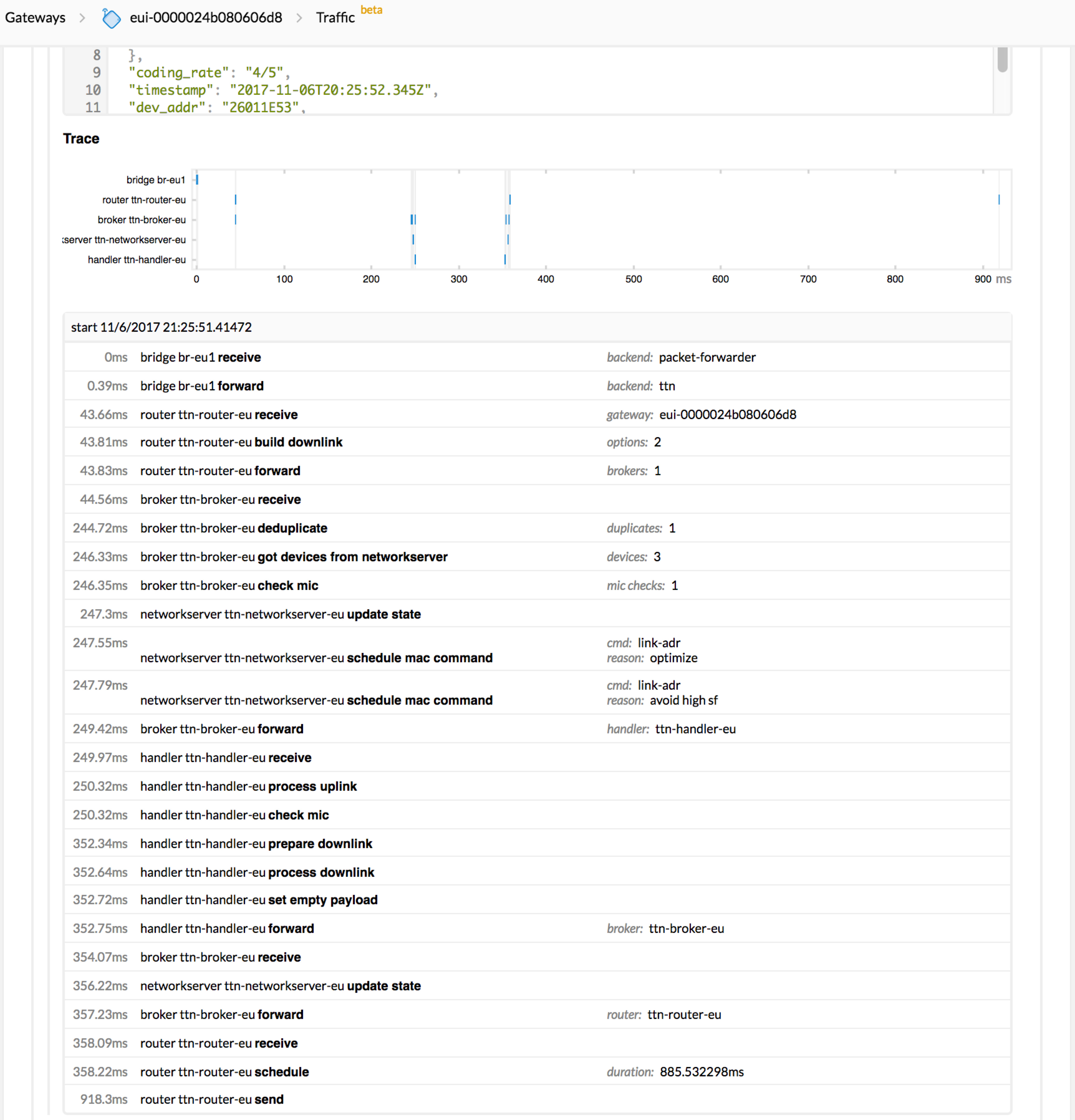

look at the “trace” of the downlink messages in the gateway view

Indeed, nice; see this screenshot of the trace of the ADR downlink:

(Though I did not see such a trace when the node did not respond to the SF9 downlink in RX2 and TTN then repeated the ADR command at SF12.)
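An ADR downlink like the one in the trace normally carries a LinkADRReq MAC command. To help read such a payload, here is a minimal Python sketch that decodes one. It assumes the LoRaWAN 1.0.x layout (CID `0x03`, then a DataRate_TXPower byte, a little-endian 2-byte ChMask and a Redundancy byte) and uses the EU868 data-rate table to map the data rate to a spreading factor. The names `parse_link_adr_req`, `LinkADRReq` and `EU868_DR_TO_SF` are hypothetical, and the example bytes are made up rather than taken from the screenshot.

```python
from dataclasses import dataclass

# EU868 data rates (LoRaWAN Regional Parameters); DR7 (FSK) omitted in this sketch.
EU868_DR_TO_SF = {
    0: "SF12BW125", 1: "SF11BW125", 2: "SF10BW125", 3: "SF9BW125",
    4: "SF8BW125", 5: "SF7BW125", 6: "SF7BW250",
}

LINK_ADR_REQ_CID = 0x03  # LinkADRReq command identifier


@dataclass
class LinkADRReq:
    data_rate: int     # DataRate_TXPower bits 7..4
    tx_power: int      # DataRate_TXPower bits 3..0
    ch_mask: int       # 16-bit channel mask (bit n enables channel n)
    ch_mask_cntl: int  # Redundancy bits 6..4
    nb_trans: int      # Redundancy bits 3..0 (transmissions per uplink)


def parse_link_adr_req(mac: bytes) -> LinkADRReq:
    """Decode a single LinkADRReq MAC command: CID followed by a 4-byte payload."""
    if len(mac) != 5 or mac[0] != LINK_ADR_REQ_CID:
        raise ValueError("not a LinkADRReq command")
    dr_txpow, mask_lo, mask_hi, redundancy = mac[1], mac[2], mac[3], mac[4]
    return LinkADRReq(
        data_rate=dr_txpow >> 4,
        tx_power=dr_txpow & 0x0F,
        ch_mask=mask_lo | (mask_hi << 8),  # ChMask is sent little-endian
        ch_mask_cntl=(redundancy >> 4) & 0x07,
        nb_trans=redundancy & 0x0F,
    )


if __name__ == "__main__":
    # Hypothetical command: DR5, TXPower 0, channels 0-2 enabled, NbTrans 1.
    req = parse_link_adr_req(bytes.fromhex("0350070001"))
    print(EU868_DR_TO_SF[req.data_rate], hex(req.ch_mask), req.nb_trans)
```

Note that the data rate requested inside the command is independent of the spreading factor used to *transmit* the downlink itself (for example SF9 in RX2 or SF12 on a retry). The sketch only decodes what the command asks the node to switch to.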